Generative sonification system

Keywords: Generative, Sonification, Multi-sensory, Interactive Experience.

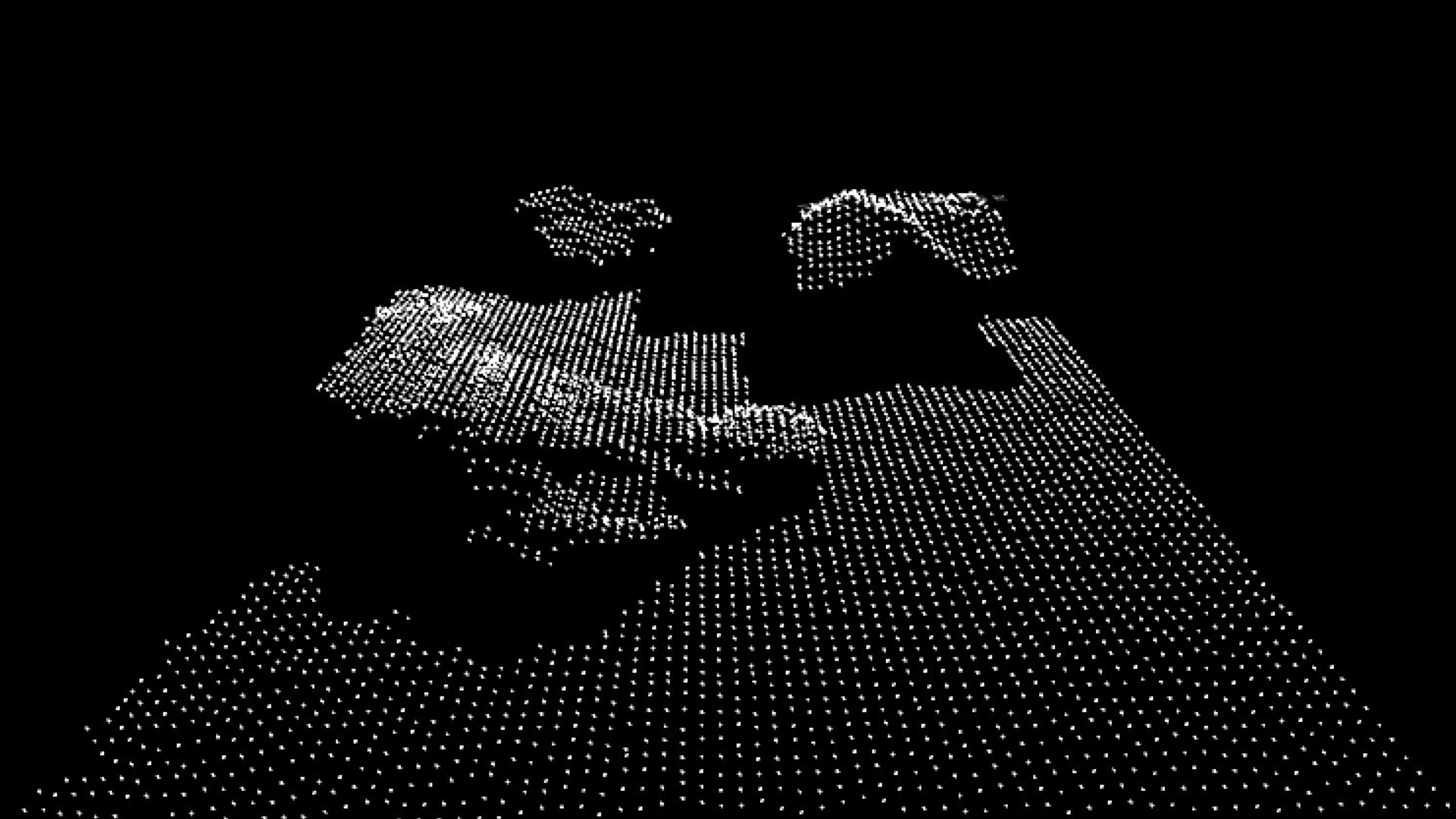

"Stone, Sound Textures" is a generative sonification system that generates sounds from visual data of stones. Visualising three-dimensional forms in real-time, the textures of the stones are mapped in a digital landscape and translated into sound-making markers. As the viewers interact and move the stones within the multi-modal system, the soundscape of textures changes.

What would a texture sound like? We perceive materials mainly through sight and touch, which lets us associate their materiality with tactile qualities. While sound is subjectively perceived, we occasionally attribute textural qualities to sounds (e.g. rough or smooth, hard or soft). How do we cognitively recognise visual textures in sound? Interested in the perceptual commonalities between our senses, STONE attempts to connect visual textures to sound textures, using computer visualisation of three-dimensional forms to obtain input data for real-time digital audio synthesis.

The visualisation system uses the P3D environment in Processing, mapping the three-dimensional forms of the stones using depth data obtained from a Kinect V1. The digital landscape exists as a point cloud, which is navigated in 3D space. For hardware, a mounting frame of 0.5 × 0.5 × 0.55 m was designed to maintain the minimum vertical distance between the stones and the Kinect. The threshold for the captured distance was scaled digitally to isolate and track specific elements. The digital landscape was calibrated to the dimensions of the horizontal frame, which bounded the physical space for stone-tracking.
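The depth-threshold step described above can be illustrated with a minimal sketch. It is written in Python rather than Processing and is not the project's code; the function name `isolate_stones`, the sample frame, and the millimetre thresholds are all assumptions made for illustration.

```python
def isolate_stones(depth_mm, near_mm, far_mm):
    """Return a binary mask: 1 where a depth reading lies in [near_mm, far_mm]."""
    mask = []
    for row in depth_mm:
        mask.append([1 if near_mm <= d <= far_mm else 0 for d in row])
    return mask


# Hypothetical 4x5 depth frame in millimetres, seen from a sensor looking down.
# The surface sits at about 550 mm; stones are closer to the sensor.
# A reading of 0 stands in for an invalid pixel and falls outside the band.
frame = [
    [550, 550, 550, 550, 550],
    [550, 500, 495, 550, 550],
    [550, 498, 490, 550,   0],
    [550, 550, 550, 550, 550],
]

# Assumed thresholds: keep only readings between 400 mm and 520 mm.
mask = isolate_stones(frame, near_mm=400, far_mm=520)

for row in mask:
    print(row)
```

Only the four readings that rise above the surface survive the threshold, which is what lets a later tracking stage treat them as a single element.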

Each stone corresponds to a specific sound in the generative process. The system recognises each stone as its own entity and tracks the number of stones present. A blob-tracking function was coded in Processing to acquire specific visual data from each stone. The sound generation uses the quantifiable data (the margins and height) of each stone as variables in the virtual synthesisers designed in SuperCollider, a platform for audio synthesis and algorithmic composition. For STONE, Open Sound Control (OSC) connects the data obtained in Processing to SuperCollider by sending OSC messages through an external network in real time.
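As a rough illustration of this data flow, the sketch below labels connected regions in a binary mask, measures each one, and packs the result as an OSC 1.0 message. It is a Python stand-in, not the Processing blob tracker used in STONE; the `/stone` address, the argument order, and the sample data are hypothetical.

```python
import struct


def find_blobs(mask, heights):
    """Label 4-connected regions of 1s; return bounding box and peak height of each."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    blobs = []
    for r0 in range(rows):
        for c0 in range(cols):
            if not mask[r0][c0] or seen[r0][c0]:
                continue
            stack, cells = [(r0, c0)], []
            seen[r0][c0] = True
            while stack:  # iterative flood fill
                r, c = stack.pop()
                cells.append((r, c))
                for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                    if (0 <= nr < rows and 0 <= nc < cols
                            and mask[nr][nc] and not seen[nr][nc]):
                        seen[nr][nc] = True
                        stack.append((nr, nc))
            rs = [r for r, _ in cells]
            cs = [c for _, c in cells]
            blobs.append({
                "x": min(cs), "y": min(rs),
                "w": max(cs) - min(cs) + 1,
                "h": max(rs) - min(rs) + 1,
                "peak": max(heights[r][c] for r, c in cells),
            })
    return blobs


def osc_string(text):
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address, *args):
    """Build an OSC 1.0 message from int32 and float32 arguments (big-endian)."""
    tags, payload = ",", b""
    for arg in args:
        if isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)
        else:
            tags += "f"
            payload += struct.pack(">f", float(arg))
    return osc_string(address) + osc_string(tags) + payload


# Hypothetical mask and per-pixel stone heights (arbitrary units).
mask = [[0, 1, 1, 0, 0],
        [0, 1, 1, 0, 1],
        [0, 0, 0, 0, 1]]
heights = [[0, 50, 55, 0, 0],
           [0, 52, 60, 0, 30],
           [0, 0, 0, 0, 28]]

blobs = find_blobs(mask, heights)
# One message per stone: index, bounding-box width and height, peak height.
messages = [osc_message("/stone", i, b["w"], b["h"], float(b["peak"]))
            for i, b in enumerate(blobs)]
```

Each entry in `messages` is a byte string that could be sent as a UDP datagram with Python's `socket` module; the destination host and port depend on how the SuperCollider side is configured.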

The sonification process consists of a set of virtual synthesisers coded on SuperCollider’s live-coding platform. The set-up is limited to five stones, and thus to five virtual synthesisers, one designed for each stone. The sounds were designed as abstract sound textures whose temporal patterns are determined by the variable inputs from the visualisation data. As the stones are introduced into the system and moved, the sounds are triggered and altered, creating an ever-changing composition of layered sound textures.
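One way to picture how stone measurements could drive a synthesiser's temporal pattern is a simple linear mapping, sketched here in Python. The real synthesisers are written in SuperCollider and their parameters are not documented in this text, so the control names (`rate_hz`, `amp`), the input ranges, and the output ranges below are purely hypothetical.

```python
MAX_STONES = 5  # the set-up is limited to five stones, one synthesiser each


def linlin(value, in_lo, in_hi, out_lo, out_hi):
    """Linearly rescale value from [in_lo, in_hi] to [out_lo, out_hi], clamping at the ends."""
    value = max(in_lo, min(in_hi, value))
    return out_lo + (value - in_lo) * (out_hi - out_lo) / (in_hi - in_lo)


def synth_controls(stones):
    """Map each stone's measurements to controls for its own synthesiser."""
    controls = []
    for index, stone in enumerate(stones[:MAX_STONES]):
        controls.append({
            "synth": index,
            # Assumed mapping: taller stones give faster pulse rates.
            "rate_hz": linlin(stone["height_mm"], 0, 100, 1.0, 12.0),
            # Assumed mapping: wider stones give louder textures.
            "amp": linlin(stone["width_mm"], 0, 200, 0.25, 0.75),
        })
    return controls


# Six hypothetical stones: the sixth exceeds the limit and is ignored.
stones = [{"height_mm": 50, "width_mm": 100} for _ in range(6)]
controls = synth_controls(stones)
```

Moving or swapping a stone changes its measurements, so recomputing the controls on every frame would alter the corresponding texture while the others continue unchanged.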

The multi-modal experience explores the subliminal links between our perceptions of texture across sight, touch and sound. The sounds could be subjectively perceived to correspond to the visual textures of the stones. As the stones act as variable controls of the generated sound, the audience can interact with the set-up as an instrumental system.

The Future Vision Online Exhibition was available from 11 February to 11 March 2021.

What you find here is a fleeting glimpse of the 21 works – or in better words, 21 future visions – coming from artists, designers, researchers, professionals, and students who explored complex questions from a critical and creative perspective.

We extend our warmest thanks to everyone who participated in the PCD21.
