Models for Environmental Literacy

Tivon Rice

Department of Digital Art and Experimental Media (DXARTS), University of Washington, US

Video 36'40"

Keywords: AI, Machine Learning, Photogrammetry, Drones, Ecology.

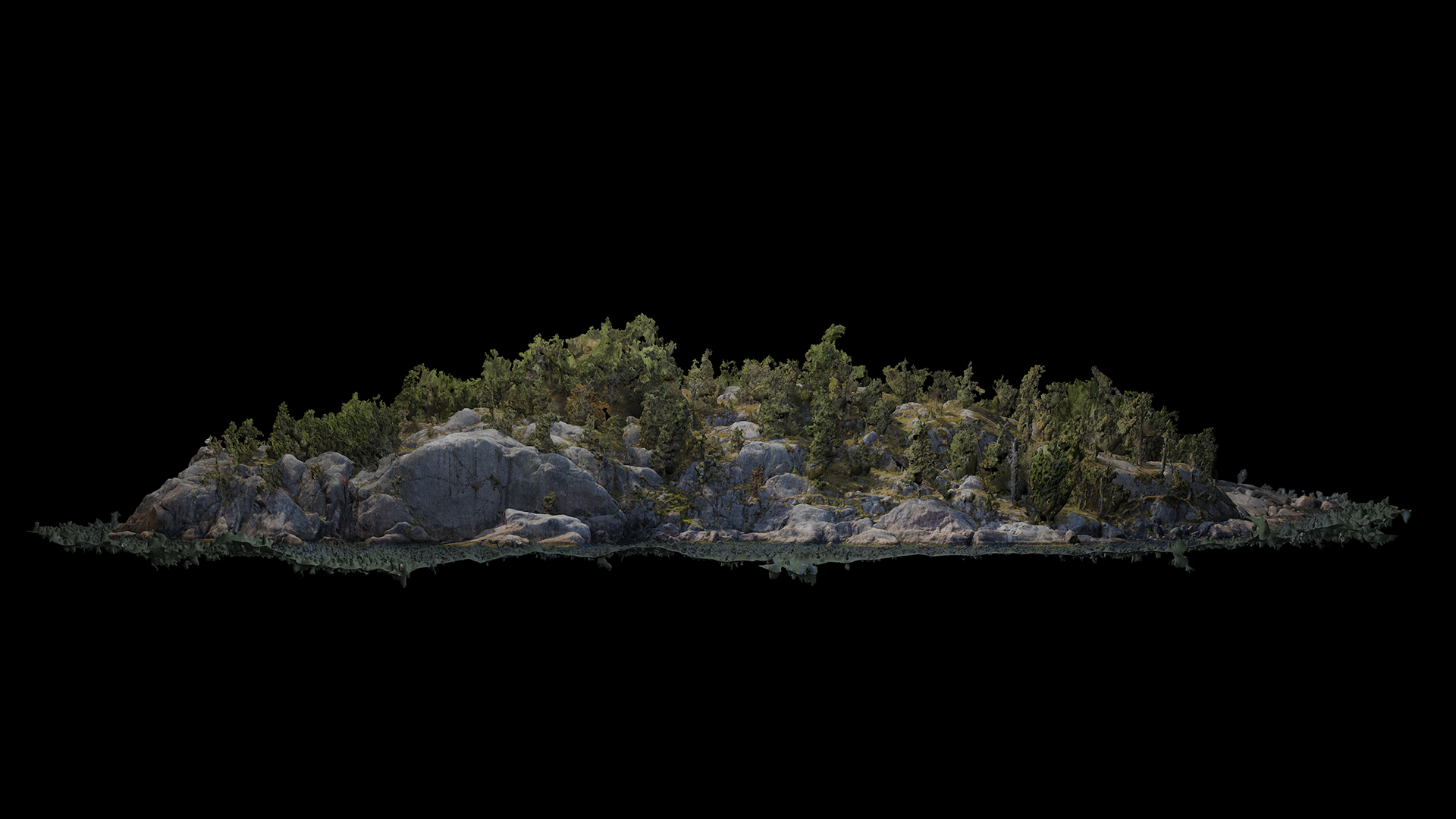

These videos creatively and critically explore the challenges of describing a landscape, an ecosystem, or the specter of environmental collapse through human language. The project further explores how language and vision are impacted by the mediating agency of new technologies. How do we see, feel, imagine, and talk about the environment in this post-digital era, when there are indeed non-human/machine agents similarly trained to perceive “natural” spaces? This project explores these questions, as well as emerging relationships with environmental surveillance, drone/computer vision, and A.I.

In the face of climate change, large-scale computer-controlled systems are being deployed to understand terrestrial systems. Artificial intelligence is used on a planetary scale to detect, analyze, and manage landscapes. In the West, there is great faith in ‘intelligent’ technology as a lifesaver. However, practice shows that the dominant AI systems lack the fundamental insights needed to act inclusively towards the complexity of ecological, social, and environmental issues. Meanwhile, the imaginative and artistic possibilities for creating non-human perspectives are often overlooked.

With the long-term research project and experimental films ‘Models for Environmental Literacy’, the artist Tivon Rice explores in a speculative manner how A.I.s could have alternative perceptions of an environment. Three distinct A.I.s were trained for the screenplay: the SCIENTIST, the PHILOSOPHER, and the AUTHOR.

The A.I.s each have their own personalities and are trained on literary work, ranging from science fiction and eco-philosophy to current intergovernmental reports on climate change. Rice brings them together for a series of conversations while they inhabit scenes from scanned natural environments. These virtual landscapes were captured on several field trips that Rice undertook with FIBER (Amsterdam) and BioArt Society (Helsinki) over the past two years. ‘Models for Environmental Literacy’ invites the viewer to rethink the nature and application of artificial intelligence in the context of the environment.

Technically, the project employed custom Python code to fine-tune the GPT-2 language model on my textual datasets (eco-science, eco-philosophy, eco-fiction). The TensorFlow machine learning library was used both to train the models and to generate text from them. Additional software was used for the video’s 3D photogrammetric modeling and animation.
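As a rough illustration of the data-preparation step that usually precedes this kind of fine-tuning, the sketch below splits one corpus per persona into overlapping training windows. It is not the project's actual code: the `PERSONAS` mapping, the `make_windows` helper, the window and stride sizes, and the whitespace tokenizer are all assumptions made for the example (GPT-2 itself uses byte-pair encoding), and the training step in TensorFlow is left out.

```python
from dataclasses import dataclass

# Persona names come from the text above; pairing each with one corpus
# label is an assumption made for this illustration.
PERSONAS = {
    "SCIENTIST": "eco-science",
    "PHILOSOPHER": "eco-philosophy",
    "AUTHOR": "eco-fiction",
}


@dataclass
class TrainingWindow:
    persona: str
    tokens: list


def tokenize(text):
    # Stand-in whitespace tokenizer (assumption); GPT-2 uses byte-pair encoding.
    return text.split()


def make_windows(persona, text, window=8, stride=4):
    """Split one persona's corpus into overlapping fixed-length token windows."""
    tokens = tokenize(text)
    windows = []
    # The last start index is chosen so that full windows never run past the
    # end; a corpus shorter than `window` yields a single short window.
    last_start = max(len(tokens) - window, 0)
    for start in range(0, last_start + 1, stride):
        chunk = tokens[start:start + window]
        if chunk:
            windows.append(TrainingWindow(persona, chunk))
    return windows


if __name__ == "__main__":
    sample = "the glacier retreats and the river changes its course each spring"
    for w in make_windows("SCIENTIST", sample):
        print(w.persona, PERSONAS[w.persona], " ".join(w.tokens))
```

Keeping one set of windows per persona reflects the idea of three separately fine-tuned models, each with its own voice; a shared model conditioned on a persona tag would be a different design.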

Sound by Stelios Manousakis (NL/GR) & Stephanie Pan (NL/US), with voices by Esther Mugambi (AU/NL), Arnout Lems (NL), & Michaela Riener (AT/NL). This work was commissioned by FIBER for the 2020 festival and has been made possible with support from the Creative Industries Fund NL, Stroom Den Haag, Google Artists and Machine Intelligence, BioArt Society, and The University of Washington Department of Digital Art and Experimental Media.

The Future Vision Online Exhibition was available from 11 February to 11 March 2021.

What you find here is a fleeting glimpse of the 21 works – or in better words, 21 future visions – coming from artists, designers, researchers, professionals, and students who explored complex questions from a critical and creative perspective.

We extend our warmest thanks to everyone who participated in PCD21.
